After I struggled to recover a moderately important VM on one of my home lab servers running generic CentOS libvirt, a colleague suggested I investigate ProxMox as a replacement for libvirt, since it offers replication and clustering features. The test was quick and I was very impressed with the features available in the community edition. It took maybe 15-30 minutes to install and get my first VM running. I quickly rolled ProxMox out on my other two lab servers and started experimenting with replication and migration of VMs between the ProxMox cluster nodes.

The recurring pain I was experiencing with VM hosts centered primarily on failed disks, both HDD and SSD, along with a rare processor failure. I had already decided to invest a significant amount of money in a commercial NAS (one of the major failures was an unrecoverable TrueNAS VM holding some archive files). Although a QNAP or Synology NAS would introduce a single point of failure for all the ProxMox hosts, I decided to start with one and see later whether I could justify the cost of a redundant QNAP. More on that in another article.

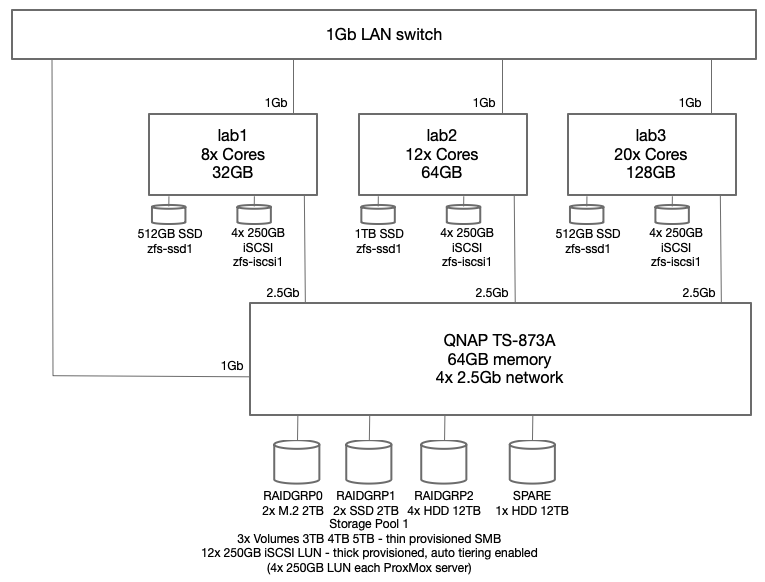

The current architecture of my lab environment now looks like this:

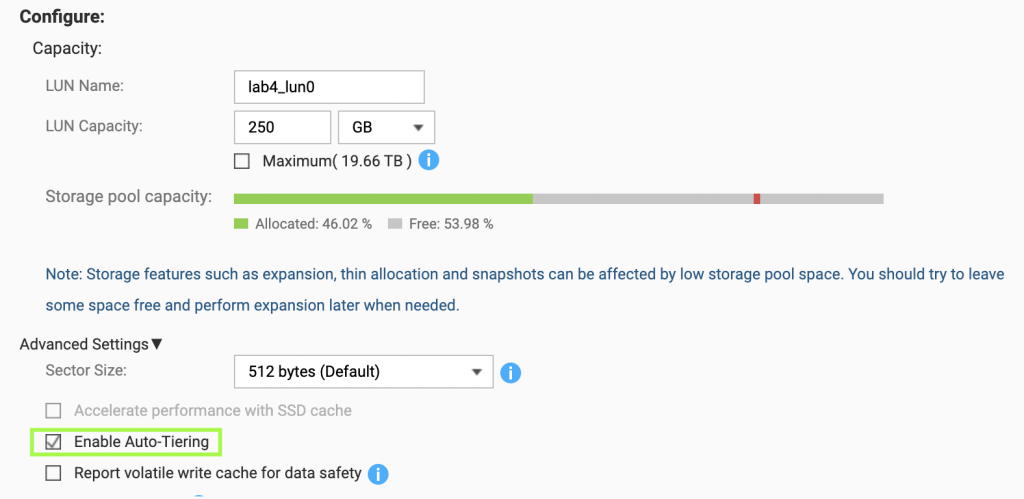

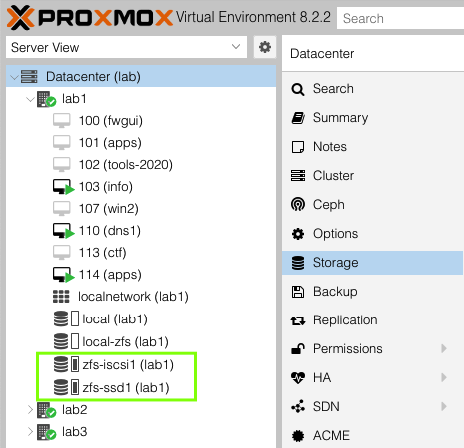

To reduce complexity, I chose to set up ProxMox for replication of VM guests and to allow live migration, but not to implement HA clustering yet. To support this configuration, the QNAP NAS advertises a number of iSCSI LUNs, each with a dedicated iSCSI target hosted on the QNAP. Through trial-and-error testing I settled on four (4) 250GB LUNs for each ProxMox host. All four LUNs are added to a new ZFS zpool, making 1TB of storage available to each ProxMox host. Since this iteration of the design does not use shared, cluster-aware storage, each host has its own dedicated 1TB ZFS pool (zfs-iscsi1); the pool is named the same on every host to facilitate replication from one ProxMox host to another. For higher performance requirements, each host also has a single SSD, placed into a ZFS pool (zfs-ssd1) that is likewise named the same on each host.

A couple of notes on architecture vulnerabilities. Each ProxMox host should have dual local disks to allow ZFS RAID1 mirroring. I chose to start with only a single SSD in each host and tolerate a local disk failure; replication will run on critical VMs to limit the loss if a local SSD fails. Any VM that cannot tolerate a disk failure will use only the iSCSI disks.

Setup ProxMox Host and Add ProxMox Hosts to Cluster

- Server configuration: 2x 1TB HDD, 1x 512GB SSD

- Download ProxMox install ISO image and burn to USB

Boot into the ProxMox installer

Assuming the new host has dual disks that can be mirrored, choose Advanced for the boot disk and select ZFS (RAID1); this will allow you to select the two disks to be mirrored

Follow the installation prompts and sign in on the console after the system reboots

- Set up the local SSD as ZFS pool “zfs-ssd1”

Use lsblk to list the attached local disks and identify the SSD

lsblk

sda 8:0 0 931.5G 0 disk

├─sda1 8:1 0 1007K 0 part

├─sda2 8:2 0 1G 0 part

└─sda3 8:3 0 930.5G 0 part

sdb 8:16 0 476.9G 0 disk

sdc 8:32 0 931.5G 0 disk

├─sdc1 8:33 0 1007K 0 part

├─sdc2 8:34 0 1G 0 part

└─sdc3 8:35 0 930.5G 0 part

Clear the disk label, if any, and create an empty GPT

sgdisk --zap-all /dev/sdb

sgdisk --clear --mbrtogpt /dev/sdb

Create ZFS pool with the SSD

zpool create zfs-ssd1 /dev/sdb

zpool list

NAME SIZE ALLOC FREE ... FRAG CAP DEDUP HEALTH

rpool 928G 4.44G 924G 0% 0% 1.00x ONLINE

zfs-ssd1 476G 109G 367G 1% 22% 1.00x ONLINE

Update /etc/pve/storage.cfg and ensure the ProxMox host is listed as a node for the zfs-ssd1 pool. The initial entry can only list the first node. When adding another ProxMox host, the new host gets added to the nodes list.

zfspool: zfs-ssd1

pool zfs-ssd1

content images,rootdir

mountpoint /zfs-ssd1

nodes lab2,lab1,lab3

Note that the /etc/pve files are maintained in a shared cluster filesystem, so edits made on one host are reflected on all other ProxMox cluster nodes.
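As an alternative to editing storage.cfg by hand, the same entry can usually be managed with the pvesm command line tool. The following is a minimal sketch, not the procedure used in this article: the function names are made up for illustration, and by default it only prints the commands (PVESM defaults to "echo pvesm") so they can be reviewed first.

```shell
#!/bin/sh
# Sketch only: PVESM defaults to "echo pvesm", so commands are printed, not run.
# Set PVESM=pvesm on a real host once the printed commands look right.
PVESM="${PVESM:-echo pvesm}"

# Register a ZFS pool as ProxMox storage, limited to the first node.
# (hypothetical helper name)
add_zfs_storage() {
    # $1 = storage ID and pool name, $2 = first node
    $PVESM add zfspool "$1" --pool "$1" --content images,rootdir --nodes "$2"
}

# Replace the node list once more hosts have a pool with the same name.
# (hypothetical helper name)
set_storage_nodes() {
    # $1 = storage ID, $2 = comma-separated node list
    $PVESM set "$1" --nodes "$2"
}

add_zfs_storage zfs-ssd1 lab1
set_storage_nodes zfs-ssd1 lab1,lab2,lab3
```

Run as-is, this prints one pvesm add line and one pvesm set line. Either route should end up with an equivalent stanza in /etc/pve/storage.cfg.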

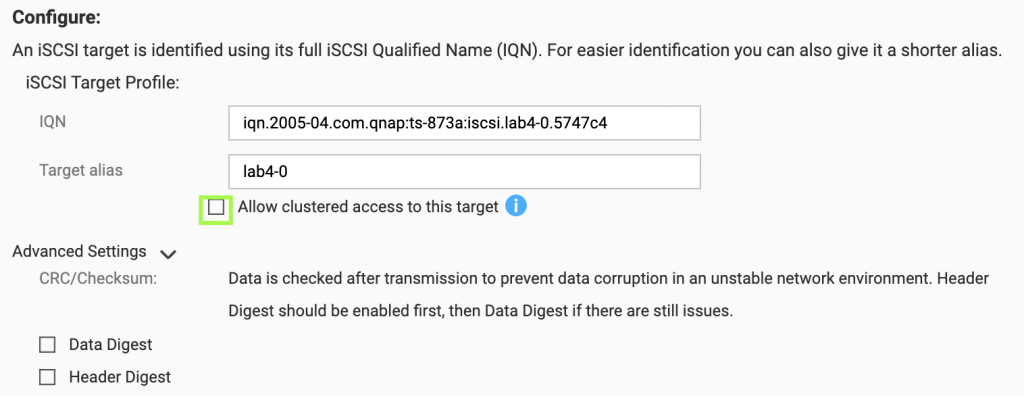

- Configure QNAP iSCSI targets with attached LUNs

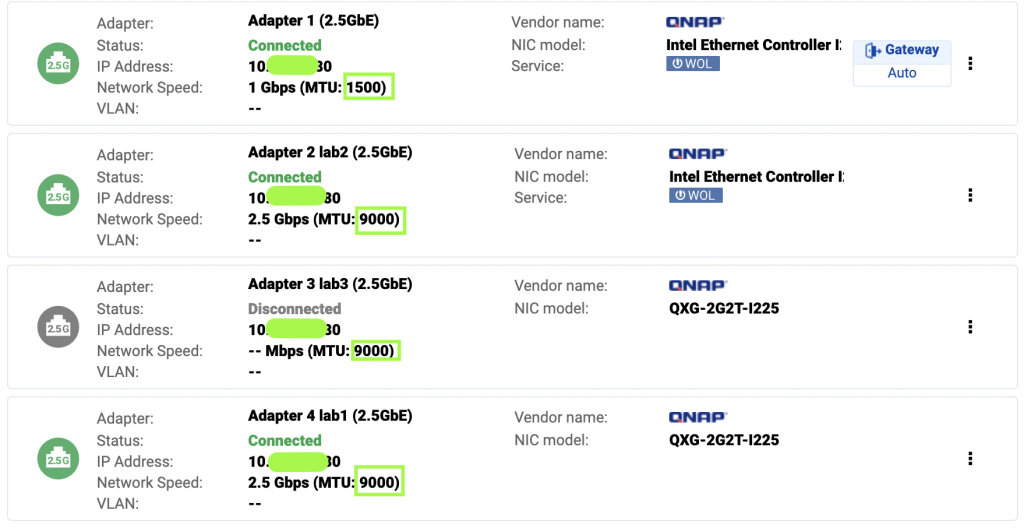

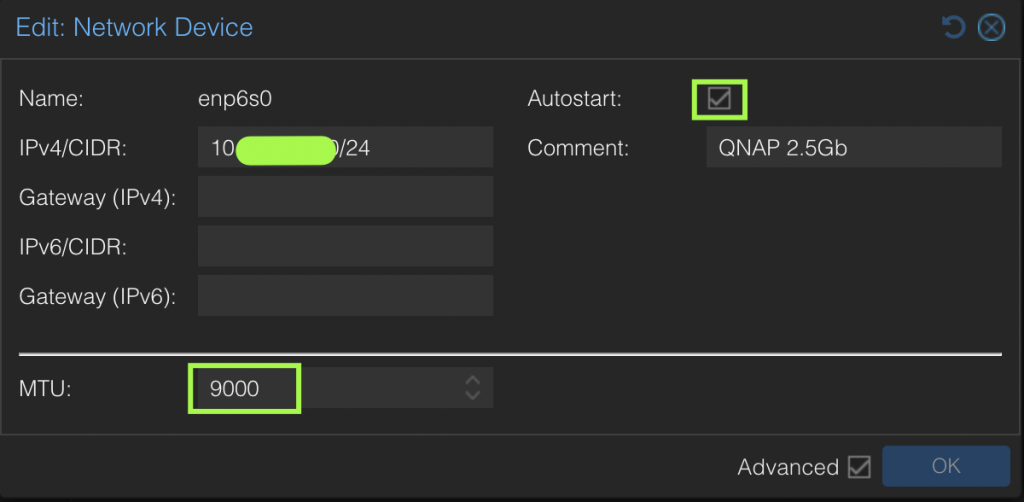

- Configure the network adapter on the ProxMox host for the direct connection to the QNAP; ensure the MTU is set to 9000 and the link speed is 2.5Gb
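A quick way to confirm that jumbo frames really pass end to end is a ping with fragmentation disallowed and a payload sized to the MTU. The sketch below only builds and prints that command; the helper names are illustrative, and it assumes the Linux iputils ping and the QNAP address 10.3.1.80 used later in this article.

```shell
#!/bin/sh
# Largest ICMP payload that fits in one frame: the MTU minus 20 bytes of
# IPv4 header and 8 bytes of ICMP header.
icmp_payload_for_mtu() {
    echo $(( $1 - 28 ))
}

# Print a ping command that tests the path without fragmentation.
# -M do sets the "don't fragment" bit, -s sets the payload size.
jumbo_ping_cmd() {
    # $1 = target address, $2 = MTU
    echo "ping -M do -c 3 -s $(icmp_payload_for_mtu "$2") $1"
}

jumbo_ping_cmd 10.3.1.80 9000
```

If the printed ping succeeds from the ProxMox host, every hop on the direct link is accepting 9000-byte frames. If it reports that the message is too long, some interface on the path is probably still at a smaller MTU.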

- Set up the iSCSI daemon and disks for creation of the zfs-iscsi1 ZFS pool

Update /etc/iscsi/iscsid.conf to set up automatic start and CHAP credentials

cp /etc/iscsi/iscsid.conf /etc/iscsi/iscsid.conf.orig

node.startup = automatic

node.session.auth.authmethod = CHAP

node.session.auth.username = qnapuser

node.session.auth.password = hUXxhsYUvLQAR
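The four settings above can also be applied without opening an editor. This is a minimal sketch under my own assumptions: set_iscsid_option is a hypothetical helper, it removes any active (uncommented) line for the key and appends the new value, and the demonstration runs against a scratch file rather than the real /etc/iscsi/iscsid.conf.

```shell
#!/bin/sh
# Set "key = value" in an iscsid.conf-style file (hypothetical helper).
# Active lines for the key are dropped and the new setting is appended;
# commented-out examples are left untouched.
set_iscsid_option() {
    # $1 = config file, $2 = key, $3 = value
    key_re=$(printf '%s' "$2" | sed 's/\./\\./g')   # escape dots for sed
    tmp=$(mktemp)
    sed "/^[[:space:]]*${key_re}[[:space:]]*=/d" "$1" > "$tmp"
    printf '%s = %s\n' "$2" "$3" >> "$tmp"
    cat "$tmp" > "$1"   # rewrite in place so ownership and mode are kept
    rm -f "$tmp"
}

# Demonstration on a scratch file only.
demo=$(mktemp)
printf '%s\n' '# node.startup = automatic' 'node.startup = manual' > "$demo"
set_iscsid_option "$demo" node.startup automatic
set_iscsid_option "$demo" node.session.auth.authmethod CHAP
cat "$demo"
rm -f "$demo"
```

On a real host the same helper would be pointed at /etc/iscsi/iscsid.conf after taking the backup copy, with the username and password set the same way.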

chmod o-rwx /etc/iscsi/iscsid.conf

systemctl restart iscsid

systemctl restart open-iscsi

Validate the connection to the QNAP: ensure no sessions exist, then run discovery of the published iSCSI targets. Be sure to use the high speed interface address of the QNAP.

iscsiadm -m session -P 3

No active sessions

iscsiadm -m discovery -t sendtargets -p 10.3.1.80:3260

10.3.1.80:3260,1 iqn.2005-04.com.qnap:ts-873a:iscsi.lab1-0.5748c4

10.3.5.80:3260,1 iqn.2005-04.com.qnap:ts-873a:iscsi.lab1-0.5748c4

10.3.1.80:3260,1 iqn.2005-04.com.qnap:ts-873a:iscsi.lab1-1.5748c4

10.3.5.80:3260,1 iqn.2005-04.com.qnap:ts-873a:iscsi.lab1-1.5748c4

10.3.1.80:3260,1 iqn.2005-04.com.qnap:ts-873a:iscsi.lab1-2.5748c4

10.3.5.80:3260,1 iqn.2005-04.com.qnap:ts-873a:iscsi.lab1-2.5748c4

10.3.1.80:3260,1 iqn.2005-04.com.qnap:ts-873a:iscsi.lab1-3.5748c4

10.3.5.80:3260,1 iqn.2005-04.com.qnap:ts-873a:iscsi.lab1-3.5748c4

In the output of the discovery it appears there are two sets of targets. This is because multiple network adapters under Network Portal on the QNAP are included in the targets. We will use the high speed address (10.3.1.80) for all the iscsiadm commands.
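Since every target is listed once per portal, it can be handy to filter the discovery output down to the high speed address and generate the login commands from it. The sketch below is one way to do that; the helper names are illustrative, it handles IPv4 portals only, and by default it just prints the iscsiadm commands (ISCSIADM defaults to "echo iscsiadm").

```shell
#!/bin/sh
# Sketch only: commands are printed unless ISCSIADM=iscsiadm is set.
ISCSIADM="${ISCSIADM:-echo iscsiadm}"

# Read discovery output on stdin and print the IQNs advertised on one portal IP.
targets_on_portal() {
    # $1 = portal IPv4 address
    awk -v ip="$1" '{ split($1, p, ":"); if (p[1] == ip) print $2 }'
}

# Read IQNs on stdin and log in to each one through the given portal.
login_targets() {
    # $1 = portal IPv4 address
    while read -r iqn; do
        $ISCSIADM -m node -T "$iqn" -p "$1:3260" -l
    done
}

# Demonstration with two lines copied from the discovery output above.
targets_on_portal 10.3.1.80 <<'EOF' | login_targets 10.3.1.80
10.3.1.80:3260,1 iqn.2005-04.com.qnap:ts-873a:iscsi.lab1-0.5748c4
10.3.5.80:3260,1 iqn.2005-04.com.qnap:ts-873a:iscsi.lab1-0.5748c4
EOF
```

On a real host the here-document would be replaced by the live output of the discovery command piped into targets_on_portal.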

Execute login to each iSCSI target

iscsiadm -m node -T iqn.2005-04.com.qnap:ts-873a:iscsi.lab1-0.5748c4 -p 10.3.1.80:3260 -l

Logging in to [iface: default, target: iqn.2005-04.com.qnap:ts-873a:iscsi.lab1-0.5748c4, portal: 10.3.1.80,3260]

Login to [iface: default, target: iqn.2005-04.com.qnap:ts-873a:iscsi.lab1-0.5748c4, portal: 10.3.1.80,3260] successful.

iscsiadm -m node -T iqn.2005-04.com.qnap:ts-873a:iscsi.lab1-1.5748c4 -p 10.3.1.80:3260 -l

Logging in to [iface: default, target: iqn.2005-04.com.qnap:ts-873a:iscsi.lab1-1.5748c4, portal: 10.3.1.80,3260]

Login to [iface: default, target: iqn.2005-04.com.qnap:ts-873a:iscsi.lab1-1.5748c4, portal: 10.3.1.80,3260] successful.

iscsiadm -m node -T iqn.2005-04.com.qnap:ts-873a:iscsi.lab1-2.5748c4 -p 10.3.1.80:3260 -l

Logging in to [iface: default, target: iqn.2005-04.com.qnap:ts-873a:iscsi.lab1-2.5748c4, portal: 10.3.1.80,3260]

Login to [iface: default, target: iqn.2005-04.com.qnap:ts-873a:iscsi.lab1-2.5748c4, portal: 10.3.1.80,3260] successful.

iscsiadm -m node -T iqn.2005-04.com.qnap:ts-873a:iscsi.lab1-3.5748c4 -p 10.3.1.80:3260 -l

Logging in to [iface: default, target: iqn.2005-04.com.qnap:ts-873a:iscsi.lab1-3.5748c4, portal: 10.3.1.80,3260]

Login to [iface: default, target: iqn.2005-04.com.qnap:ts-873a:iscsi.lab1-3.5748c4, portal: 10.3.1.80,3260] successful.

Verify iSCSI disks were attached

lsblk

NAME MAJ:MIN RM SIZE RO TYPE MOUNTPOINTS

sda 8:0 0 931.5G 0 disk

├─sda1 8:1 0 1007K 0 part

├─sda2 8:2 0 1G 0 part

└─sda3 8:3 0 930.5G 0 part

sdb 8:16 0 476.9G 0 disk

├─sdb1 8:17 0 476.9G 0 part

└─sdb9 8:25 0 8M 0 part

sdc 8:32 0 931.5G 0 disk

├─sdc1 8:33 0 1007K 0 part

├─sdc2 8:34 0 1G 0 part

└─sdc3 8:35 0 930.5G 0 part

sdd 8:48 0 250G 0 disk

sde 8:64 0 250G 0 disk

sdf 8:80 0 250G 0 disk

sdg 8:96 0 250G 0 disk

Create GPT label on new disks
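Because sgdisk --zap-all is destructive, it can help to confirm which devices are the iSCSI LUNs before running the commands that follow. A minimal sketch, assuming lsblk reports the transport type in its TRAN column: iscsi_disks is a hypothetical helper, and the "sata" values in the sample input are my assumption, since the listing above does not show transports.

```shell
#!/bin/sh
# Read `lsblk -dn -o NAME,TRAN` output on stdin and print the device path
# of every disk whose transport is iSCSI.
iscsi_disks() {
    awk '$2 == "iscsi" { print "/dev/" $1 }'
}

# Demonstration with sample input shaped like the lsblk listing above.
iscsi_disks <<'EOF'
sda sata
sdb sata
sdc sata
sdd iscsi
sde iscsi
sdf iscsi
sdg iscsi
EOF
```

On a real host the equivalent would be lsblk -dn -o NAME,TRAN piped into iscsi_disks; only the devices it prints should be passed to sgdisk.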

sgdisk --zap-all /dev/sdd

sgdisk --clear --mbrtogpt /dev/sdd

sgdisk --zap-all /dev/sde

sgdisk --clear --mbrtogpt /dev/sde

sgdisk --zap-all /dev/sdf

sgdisk --clear --mbrtogpt /dev/sdf

sgdisk --zap-all /dev/sdg

sgdisk --clear --mbrtogpt /dev/sdg

Create ZFS pool for iSCSI disks
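The pool creation command that follows refers to the LUNs by kernel names such as /dev/sdd, which are assigned in detection order and are not guaranteed to stay the same across reboots. One option, not the one used in this article, is to look up a persistent name under /dev/disk/by-id and pass that to zpool create instead. The sketch below assumes a Linux readlink that supports -f; stable_name is a hypothetical helper.

```shell
#!/bin/sh
# Print the first symlink in a directory that resolves to the given device.
stable_name() {
    # $1 = device such as /dev/sdd, $2 = link directory (default /dev/disk/by-id)
    dir="${2:-/dev/disk/by-id}"
    want=$(readlink -f "$1")
    for link in "$dir"/*; do
        [ -L "$link" ] || continue
        if [ "$(readlink -f "$link")" = "$want" ]; then
            echo "$link"
            return 0
        fi
    done
    return 1
}

# Look up each iSCSI disk; report it when no persistent name is found.
for d in /dev/sdd /dev/sde /dev/sdf /dev/sdg; do
    stable_name "$d" || echo "no persistent name found for $d"
done
```

A device can have several links under by-id; this sketch simply returns the first match in alphabetical order.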

zpool create zfs-iscsi1 /dev/sdd /dev/sde /dev/sdf /dev/sdg

zpool list

NAME SIZE ALLOC FREE ... FRAG CAP DEDUP HEALTH

rpool 928G 4.44G 924G 0% 0% 1.00x ONLINE

zfs-ssd1 476G 109G 367G 1% 22% 1.00x ONLINE

zfs-iscsi1 992G 113G 879G 0% 11% 1.00x ONLINE

Set up automatic login on boot for iSCSI disks

iscsiadm -m node -T iqn.2005-04.com.qnap:ts-873a:iscsi.lab1-0.5748c4 -p 10.3.1.80 -o update -n node.startup -v automatic

iscsiadm -m node -T iqn.2005-04.com.qnap:ts-873a:iscsi.lab1-1.5748c4 -p 10.3.1.80 -o update -n node.startup -v automatic

iscsiadm -m node -T iqn.2005-04.com.qnap:ts-873a:iscsi.lab1-2.5748c4 -p 10.3.1.80 -o update -n node.startup -v automatic

iscsiadm -m node -T iqn.2005-04.com.qnap:ts-873a:iscsi.lab1-3.5748c4 -p 10.3.1.80 -o update -n node.startup -v automatic

Update /etc/pve/storage.cfg for the zfs-iscsi1 ZFS pool to show up in the ProxMox GUI. The initial entry can only list the first node. When adding another ProxMox host, the new host gets added to the nodes list.

zfspool: zfs-iscsi1

pool zfs-iscsi1

content images,rootdir

mountpoint /zfs-iscsi1

nodes lab2,lab1,lab3
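After adding a host, a quick check that it really appears in the nodes list for a storage entry can save some confusion in the GUI. The sketch below parses a storage.cfg-style file with awk; storage_nodes and node_listed are hypothetical helpers, and the demonstration uses a scratch copy of the stanza above rather than the live /etc/pve/storage.cfg.

```shell
#!/bin/sh
# Print the nodes value of one storage entry from a storage.cfg-style file.
storage_nodes() {
    # $1 = storage ID, $2 = file (default /etc/pve/storage.cfg)
    awk -v id="$1" '
        /^[a-z0-9]+:/ { in_entry = ($2 == id); next }  # header, e.g. "zfspool: zfs-iscsi1"
        in_entry && $1 == "nodes" { print $2 }
    ' "${2:-/etc/pve/storage.cfg}"
}

# Succeed only if the node is present in that entry's nodes list.
node_listed() {
    # $1 = storage ID, $2 = node name, $3 = file
    storage_nodes "$1" "$3" | tr ',' '\n' | grep -qxF "$2"
}

# Demonstration on a scratch file holding the stanza shown above.
cfg=$(mktemp)
cat > "$cfg" <<'EOF'
zfspool: zfs-iscsi1
	pool zfs-iscsi1
	content images,rootdir
	mountpoint /zfs-iscsi1
	nodes lab2,lab1,lab3
EOF
storage_nodes zfs-iscsi1 "$cfg"
node_listed zfs-iscsi1 lab3 "$cfg" && echo "lab3 is listed"
rm -f "$cfg"
```

An entry without a nodes line prints nothing here; to my understanding ProxMox then treats the storage as available on all nodes, which is not what this per-host pool design wants.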

Next I will cover the configuration of VMs for disk replication across one or more ProxMox hosts in this article: How to Configure ProxMox VMs for Replication